With a Cohen's d of 0.8, 78.8% of the 'treatment' group will be above the mean of the 'control' group (Cohen's U3), 68.9% of the two groups will overlap, and there is a 71.4% chance that a person picked at random from the treatment group will have a higher score than a person picked at random from the control group (probability of superiority).
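The three percentages quoted for d = 0.8 can be reproduced with a few lines of Python. This is a minimal sketch that assumes both groups are normally distributed with equal standard deviations; the function names `normal_cdf` and `overlap_stats` are illustrative, not taken from any library.

```python
from math import erf, sqrt


def normal_cdf(z):
    """Cumulative distribution function of the standard normal."""
    return 0.5 * (1.0 + erf(z / sqrt(2.0)))


def overlap_stats(d):
    """Return (U3, overlap, probability of superiority) for a given d.

    Assumes two normal distributions with equal standard deviations.
    """
    u3 = normal_cdf(d)                          # share of treatment above control mean
    overlap = 2.0 * normal_cdf(-abs(d) / 2.0)   # overlapping area of the two curves
    superiority = normal_cdf(d / sqrt(2.0))     # P(random treatment > random control)
    return u3, overlap, superiority


u3, overlap, superiority = overlap_stats(0.8)
print(f"U3 = {u3:.1%}, overlap = {overlap:.1%}, superiority = {superiority:.1%}")
```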

Repeat after me: 'statistical significance is not everything.'

It's just as important to have some measure of how practically significant an effect is, and this is done using what we call an effect size.

Cohen's d is one of the most common ways we measure the size of an effect.

Here, I'll show you how to calculate it. If you'd rather skip all that, you can download a free spreadsheet to do the dirty work for you.

The spreadsheet will also share a confidence interval and margin of error for your Cohen's d.

The Formula

Cohen's d is simply a measure of the distance between two means, measured in standard deviations. The formula used to calculate the Cohen's d looks like this:

$$d = \frac{M_1 - M_2}{SD_{pooled}}$$

Where M1 and M2 are the means for the 1st and 2nd samples, and SDpooled is the pooled standard deviation for the samples. SDpooled is properly calculated using this formula:

$$SD_{pooled} = \sqrt{\frac{\sum (X_1 - \bar{X}_1)^2 + \sum (X_2 - \bar{X}_2)^2}{n_1 + n_2 - 2}}$$

In practice, though, you don't necessarily have all this raw data, and you can typically use this much simpler formula:

$$SD_{pooled} = \sqrt{\frac{SD_1^2 + SD_2^2}{2}}$$

The spreadsheet I've included on this page allows you to use either formula.

In the first, more lengthy formula, X1 represents a sample point from your first sample, and Xbar1 represents the sample mean for the first sample. The distance between the sample mean and the sample point is squared before it is summed over every sample point (otherwise you would just get zero). Obviously, X2 and Xbar2 represent the sample point and sample mean from the second sample. n1 and n2 represent the sample sizes for the 1st and 2nd sample, respectively.

In the second, simpler formula, SD1 and SD2 represent the standard deviations for samples 1 and 2, respectively.
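Both routes to Cohen's d can be sketched in Python. This example assumes the usual definitions: the raw-data pooled standard deviation divides the summed squared deviations by n1 + n2 - 2, and the simpler version takes the square root of the average of the two variances. The function names and the sample data are made up for illustration.

```python
from math import sqrt
from statistics import mean, stdev


def pooled_sd_raw(sample1, sample2):
    """Pooled SD from raw data: summed squared deviations over n1 + n2 - 2."""
    m1, m2 = mean(sample1), mean(sample2)
    ss1 = sum((x - m1) ** 2 for x in sample1)
    ss2 = sum((x - m2) ** 2 for x in sample2)
    return sqrt((ss1 + ss2) / (len(sample1) + len(sample2) - 2))


def pooled_sd_simple(sd1, sd2):
    """Pooled SD from the two sample standard deviations alone."""
    return sqrt((sd1 ** 2 + sd2 ** 2) / 2)


def cohens_d(m1, m2, sd_pooled):
    """Difference between the means, in pooled-SD units."""
    return (m1 - m2) / sd_pooled


# Hypothetical data, for illustration only.
treatment = [5.0, 6.0, 7.0, 8.0, 9.0]
control = [3.0, 4.0, 5.0, 6.0, 7.0]

d_raw = cohens_d(mean(treatment), mean(control),
                 pooled_sd_raw(treatment, control))
d_simple = cohens_d(mean(treatment), mean(control),
                    pooled_sd_simple(stdev(treatment), stdev(control)))
print(round(d_raw, 4), round(d_simple, 4))
```

With equal sample sizes the two versions agree exactly; with unequal sample sizes the simpler formula is only an approximation, because it weights both samples equally.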

Now for a few frequently asked questions.

Can your Cohen's d have a negative effect size?

Yes, but it's important to understand why, and what it means. The sign of your Cohen's d depends on which sample means you label 1 and 2. If M1 is bigger than M2, your effect size will be positive. If the second mean is larger, your effect size will be negative.

In short, the sign of your Cohen's d effect tells you the direction of the effect. If M1 is your experimental group, and M2 is your control group, then a negative effect size indicates the effect decreases your mean, and a positive effect size indicates that the effect increases your mean.

How is Cohen's d related to statistical significance?

It isn't.

It's important to understand this distinction.

To say that a result is statistically significant at level alpha is to say that data at least this extreme would turn up less than alpha percent of the time if no effect existed. Statistical significance is about the strength of the evidence that an effect is real; it says nothing about the size of the effect.

By contrast, Cohen's d and other measures of effect size are just that, ways to measure how big the effect is (and in which direction). Cohen's d tells you how big the effect is compared to the standard deviation of your samples. It says nothing about the statistical significance of the effect. A large Cohen's d doesn't necessarily mean that an effect actually exists, because Cohen's d is just your best estimate of how big the effect is, assuming it does exist.

(Of course, if you have a confidence interval for your Cohen's d, then the confidence interval can tell you whether or not the effect is significant, depending on whether or not it contains 0.)

Can you convert between Cohen's d and r, and if so, when?

There is a relationship between Cohen's d and correlation (r). The following formula is most commonly used to calculate d from r:

$$d = \frac{2r}{\sqrt{1 - r^2}}$$

And this formula is used to find r from d:

$$r = \frac{d}{\sqrt{d^2 + a}}$$

Where a is a correction factor found using the sample sizes:

$$a = \frac{(n_1 + n_2)^2}{n_1 n_2}$$

However, it's important to realize that these conversions can sometimes change your interpretation of the data, in particular when base rates are important. You can find an in-depth academic discussion of conversions between the two in this paper.

For conversions between d and the log odds ratio, you can also take a look at this paper.
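The d and r conversions can be sketched as below. This assumes the commonly used formulas d = 2r / sqrt(1 - r^2) and r = d / sqrt(d^2 + a), with a = (n1 + n2)^2 / (n1 * n2); the function names are illustrative, and the caveat about base rates still applies to whatever numbers come out.

```python
from math import sqrt


def d_from_r(r):
    """Convert a correlation r into Cohen's d (requires -1 < r < 1)."""
    return 2 * r / sqrt(1 - r ** 2)


def r_from_d(d, n1, n2):
    """Convert Cohen's d into r, correcting for the two sample sizes."""
    a = (n1 + n2) ** 2 / (n1 * n2)  # equals 4 when the groups are the same size
    return d / sqrt(d ** 2 + a)


d = d_from_r(0.5)
r_back = r_from_d(d, n1=30, n2=30)
print(round(d, 4), round(r_back, 4))
```

With equal group sizes the round trip returns the original r; with unequal groups, a is larger than 4 and the recovered r is smaller.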

Can you statistically compare two independent Cohen's d results?

Yes, but not at face value, and only with extreme caution.

Remember, Cohen's d is the difference between two means, measured in standard deviations. If two experiments are sampled from different populations, the standard deviations are going to be different, so the effect size will also be different.

For example, you can't compare the effect size of an antidepressant on depressed people with the effect size of an antidepressant on schizophrenic people. The inherent variance of the sample populations is going to be different, so the resulting effect sizes are also going to be different.

Assuming that the experiments were both conducted on the same population, it's still not a good idea to compare Cohen's d results at face value. If one value is larger, this doesn't mean there is a statistically significant difference between the two effect sizes.

The simplest way to compare effect sizes is by their confidence intervals

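If the confidence intervals do not overlap, the difference is statistically significant. If they do overlap, treat the difference as unproven; this check is conservative, so a formal test can still find a difference. To find the confidence interval, you need the variance. The variance of the Cohen's d statistic is found using:

$$\mathrm{Var}(d) = \frac{n_1 + n_2}{n_1 n_2} + \frac{d^2}{2(n_1 + n_2)}$$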

You can use this variance to find the confidence interval. You can also use the spreadsheet I've provided on this page to get the confidence interval.
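As a rough sketch of that calculation, the Python below uses the common large-sample approximation Var(d) = (n1 + n2) / (n1 * n2) + d^2 / (2 * (n1 + n2)) and a normal-based 95% interval. The function names and the two example studies are hypothetical, and the spreadsheet may use a different method.

```python
from math import sqrt


def d_variance(d, n1, n2):
    """Approximate sampling variance of Cohen's d."""
    return (n1 + n2) / (n1 * n2) + d ** 2 / (2 * (n1 + n2))


def d_confidence_interval(d, n1, n2, z=1.96):
    """Normal-approximation confidence interval (z = 1.96 gives about 95%)."""
    margin = z * sqrt(d_variance(d, n1, n2))
    return d - margin, d + margin


def intervals_overlap(ci_a, ci_b):
    """True if two (low, high) intervals share at least one point."""
    return ci_a[0] <= ci_b[1] and ci_b[0] <= ci_a[1]


# Two hypothetical studies drawn from the same population.
ci_a = d_confidence_interval(0.8, n1=50, n2=50)
ci_b = d_confidence_interval(0.3, n1=50, n2=50)
print(ci_a, ci_b, intervals_overlap(ci_a, ci_b))
```

The margin of error is simply z times the square root of the variance, so larger samples tighten the interval around d.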

Can you calculate Cohen's d from the results of t-tests or F-tests?

Yes, you can. This paper explains how to do that beautifully. If there's enough demand for it, I might put together a spreadsheet for this also.
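For the simplest case, two independent groups, a standard conversion is d = t * sqrt(1/n1 + 1/n2), and an F-test with one numerator degree of freedom reduces to the same thing because F = t^2. The sketch below assumes that case only; it is not necessarily the method described in the linked paper, and the function names are illustrative.

```python
from math import sqrt


def d_from_t(t, n1, n2):
    """Cohen's d from an independent-samples t statistic."""
    return t * sqrt(1 / n1 + 1 / n2)


def d_from_f(f, n1, n2):
    """Magnitude of Cohen's d from a two-group F statistic (F = t squared).

    F carries no sign, so the direction of the effect has to come from
    the group means themselves.
    """
    return sqrt(f) * sqrt(1 / n1 + 1 / n2)


d_t = d_from_t(2.5, n1=20, n2=20)
d_f = d_from_f(6.25, n1=20, n2=20)
print(round(d_t, 4), round(d_f, 4))
```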

In our two previous posts on Cohen's d and standardized effect size measures [1, 2], we learned why we might want to use such a measure, how to calculate it for two independent groups, and why we should always be mindful of what standardizer (i.e., the denominator in d = effect size / standardizer) is used to calculate Cohen's d.

But how do we interpret Cohen's d?

First a tangent: bias in Cohen's d

Most statistical analyses try to inform us about the population we are studying, not the sample of that population we happen to have tested for our study. With Cohen's d we want to estimate the standardized effect size for a given population.

If our standardizer is an estimate, which it almost always will be, d will be a biased measure and tend to overestimate the population effect size. As pointed out by Geoff Cumming and Robert Calin-Jageman in their book Introduction to the New Statistics: Estimation, Open Science, and Beyond, if our sample size is less than 50 we should be reporting d_unbiased. The unbiased version of Cohen's d is often referred to as Hedges' g and can easily be calculated by various statistical packages, including R.
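The correction is small enough to do by hand. The sketch below uses the widely quoted approximation g = d * (1 - 3 / (4 * df - 1)) with df = n1 + n2 - 2 for two independent groups; the exact correction used by a given package or by the book may differ slightly, and the function names are illustrative.

```python
def hedges_correction(n1, n2):
    """Approximate small-sample correction factor for two independent groups."""
    df = n1 + n2 - 2
    return 1 - 3 / (4 * df - 1)


def hedges_g(d, n1, n2):
    """Bias-corrected effect size (d_unbiased, or Hedges' g)."""
    return d * hedges_correction(n1, n2)


g_small = hedges_g(0.8, n1=10, n2=10)    # noticeable shrinkage
g_large = hedges_g(0.8, n1=100, n2=100)  # almost no change
print(round(g_small, 4), round(g_large, 4))
```

The factor is always below 1, so g is slightly smaller than d, and the gap closes as the samples grow.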

r-based family of effect size values.

There is another family of standardized effect size measures based on r, which is often used in correlation and regression analysis. As explained in this article, eta squared (η², the n-looking thing) is the biased version and omega squared (ω², the w-looking thing) is the unbiased version.

Thinking about Cohen's d: Cohen's reference values

Cohen was reluctant to provide reference values for his standardized effect size measures. Although he stated that d = 0.2, 0.5 and 0.8 correspond to small, medium and large effects, he specified that these values provide a conventional frame of reference which is recommended when no other basis is available.

Thinking about Cohen's d: overlap pictures

If we can assume that our data comes from a population with a normal distribution, it is helpful to picture the amount of overlap between two distributions associated with various values of Cohen's d. Below is a figure illustrating the amount of overlap associated with the three d values identified by Cohen (code used to generate figure is available here:

Figure 1: Examples of overlap between two normally distributed groups for different Cohen d values. The mean of the pink population is 50. The standardizer (i.e., the standard deviation) of the between-group difference is 15. Thus, for a standardised between-group difference of 0.5, the between-group difference (effect size; ES) in original units will be 0.5 = ES/15, which gives 7.5. So the difference between the mean of the two distributions is 1/2 a standard deviation, or 7.5 (figure panel 2).

As you can see, there is considerable overlap between the two distributions even when Cohen's d indicates a large effect. This means that even for large effects there will be many individuals that go against the population-level pattern. Always keep these types of figures in mind when trying to interpret effect size measures.
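The arithmetic in the figure caption generalizes to Cohen's other reference values. This short sketch reuses the caption's numbers (a reference mean of 50 and a standardizer of 15) and assumes the second group sits above the first; the variable and function names are illustrative.

```python
REFERENCE_MEAN = 50.0  # mean of the pink population in the figure
STANDARDIZER = 15.0    # standard deviation used as the standardizer


def effect_in_original_units(d, standardizer=STANDARDIZER):
    """Rearrange d = ES / standardizer to recover ES in original units."""
    return d * standardizer


for d in (0.2, 0.5, 0.8):
    es = effect_in_original_units(d)
    # Assumption: the second group has the higher mean.
    print(f"d = {d}: ES = {es:.1f}, second group mean = {REFERENCE_MEAN + es:.1f}")
```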

Thinking about Cohen's d: effect size in original units

This is often the first approach to use when interpreting results. The outcome measure used to compute Cohen's d may have known reference values (e.g., BMI) or a meaningful scale (e.g., hours of sleep per night).

Thinking about Cohen's d: the standardizer and the reference population

Cohen's d is a number of standard deviation units. It is important to ask yourself what standard deviation these units are based on. As was discussed in the previous post, if available it is always better to use an estimate of the population standard deviation rather than the standard deviation of the studied sample. If such a value is not available and the sample standard deviation is used, be aware that, as the denominator in the formula, the standardizer can have a large influence on the value of d.

Thinking about Cohen's d: values of d across disciplines

In any discipline there is a wide range of effect sizes reported. However, as highlighted by Cumming and Calin-Jageman, researchers in various fields have reported on what range of d values can be expected.

Cohen's D

Cohen's d is one of the most common ways we measure the size of an effect.

Here, I'll show you how to calculate it. If you'd rather skip all that, you can download a free spreadsheet to do the dirty work for you right here. Just use this form to sign up for the spreadsheet, and for more practical updates like this one:

(We will never share your email address with anybody.)

The spreadsheet will also share a confidence interval and margin of error for your Cohen's d.

The Formula

Cohen's d is simply a measure of the distance between two means, measured in standard deviations. The formula used to calculate the Cohen's d looks like this:

Where M1and M2 are the means for the 1st and 2nd samples, and SDpooled is the pooled standard deviation for the samples. SDpooled is properly calculated using this formula:

In practice, though, you don't necessarily have all this raw data, and you can typically use this much simpler formula:

The spreadsheet I've included on this page allows you to use either formula.

In the first, more lengthy formula, X1 represents a sample point from your first sample, and Xbar1 represents the sample mean for the first sample. The distance between the sample mean and the sample point is squared before it is summed over every sample point (otherwise you would just get zero). Obviously, X2 and Xbar2 represent the sample point and sample mean from the second sample. n1 and n2 represent the sample sizes for the 1st and 2nd sample, respectively.

In the second, simpler formula, SD1 and SD2 represent the standard deviations for samples 1 and 2, respectively.

Now for a few frequently asked questions.

Can your Cohen's d have a negative effect size?

Yes, but it's important to understand why, and what it means. The sign of your Cohen's d depends on which sample means you label 1 and 2. If M1is bigger than M2, your effect size will be positive. If the second mean is larger, your effect size will be negative.

In short, the sign of your Cohen's d effect tells you the direction of the effect. If M1 is your experimental group, and M2 is your control group, then a negative effect size indicates the effect decreases your mean, and a positive effect size indicates that the effect increases your mean.

How is Cohen's d related to statistical significance?

It isn't.

It's important to understand this distinction.

To say that a result is statistically significant is to say that you are confident, to 100 minus alpha percent, that an effect exists. Statistical significance is about how sure you are that an effect is real; it says nothing about the size of the effect.

By contrast, Cohen's d and other measures of effect size are just that, ways to measure how big the effect is (and in which direction). Cohen's d tells you how big the effect is compared to the standard deviation of your samples. It says nothing about the statistical significance of the effect. A large Cohen's d doesn't necessarily mean that an effect actually exists, because Cohen's d is just your best estimate of how big the effect is, assuming it does exist.

(Of course, if you have a confidence interval for your Cohen's d, then the confidence interval can tell you whether or not the effect is significant, depending on whether or not it contains 0.)

Can you convert between Cohen's d and r, and if so, when?

There is a relationship between Cohen's d and correlation (r). The following formula is most commonly used to calculate d from r:

And this formula is used to find r from d:

Where a is a correlation factor found using the sample sizes:

However, it's important to realize that these conversions can sometimes change your interpretation of the data, in particular when base rates are important. You can find an in depth academic discussion of conversions between the two in this paper.

For conversions between d and the log odds ratio, you can also take a look at this paper.

Can you statistically compare two independent Cohen's d results?

Yes, but not at face value, and only with extreme caution.

Remember, Cohen's d is the difference between two means, measured in standard deviations. If two experiments are sampled from different populations, the standard deviations are going to be different, so the effect size will also be different.

For example, you can't compare the effect size of an antidepressant on depressed people with the effect size of an antidepressant on schizophrenic people. The inherent variance of the sample populations are going to be different, so the resulting effect sizes are also going to be different.

Assuming that the experiments were both conducted on the same population, it's still not a good idea to compare Cohen's d results at face value. If one value is larger, this doesn't mean there is a statistically significant difference between the two effect sizes.

The simplest way to compare effect sizes is by their confidence intervals

If the confidence intervals overlap, the difference isn't statistically significant. To find the confidence interval, you need the variance. The variance of the Cohen's d statistic is found using:

You can use this variance to find the confidence interval. You can also use the spreadsheet I've provided on this page to get the confidence interval.

Results Cohen's D Article

Can you calculate Cohen's d from the results of t-tests or F-tests?

Yes, you can. This paper explains how to do that beautifully. If there's enough demand for it, I might put together a spreadsheet for this also.

Want to download the Cohen's d spreadsheet and let it do the dirty work? Sign up here:

In our two previous post on Cohen's d and standardized effect size measures [1, 2], we learned why we might want to use such a measure, how to calculate it for two independent groups, and why we should always be mindful of what standardizer (i.e., the denominator in d = effect size / standardizer) is used to calculate Cohen's d.

But how do we interpret Cohen's d?

First a tangent: bias in Cohen's d

Most statistical analyses try to inform us about the population we are studying, not the sample of that population we happen to have tested for our study. With Cohen's d we want to estimate the standardized effect size for a given population.

If our standardizer is an estimate, which it almost always will be, d will be a biased measure and tend to overestimate our estimate of the population effect size. As pointed out by Cummings and Robert Calin-Jageman in their book Introduction to the New Statistics: Estimation, Open Science, and Beyond, if our sample size is less that 50 we should be reporting d_unbiased. The unbiased version of Cohen's d is often referred to as Hedge's g and can easily be calculated by various statistical packages, including R.

r-based family of effect size values.

There is another family of standardized effect size measures based on r, which is often used in correlation and regression analysis. As explained in this article, eta squared (n-looking thing) is the biased version and omega squared (w-looking thing) is the unbiased version.

Thinking about Cohen's d: Cohen's reference values

Cohen was reluctant to provide reference values for his standardized effect size measures. Although he stated that d = 0.2, 0.5 and 0.8 correspond to small, medium and large effects, he specified that these values provide a conventional frame of reference which is recommended when no other basis is available.

Thinking about Cohen's d: overlap pictures

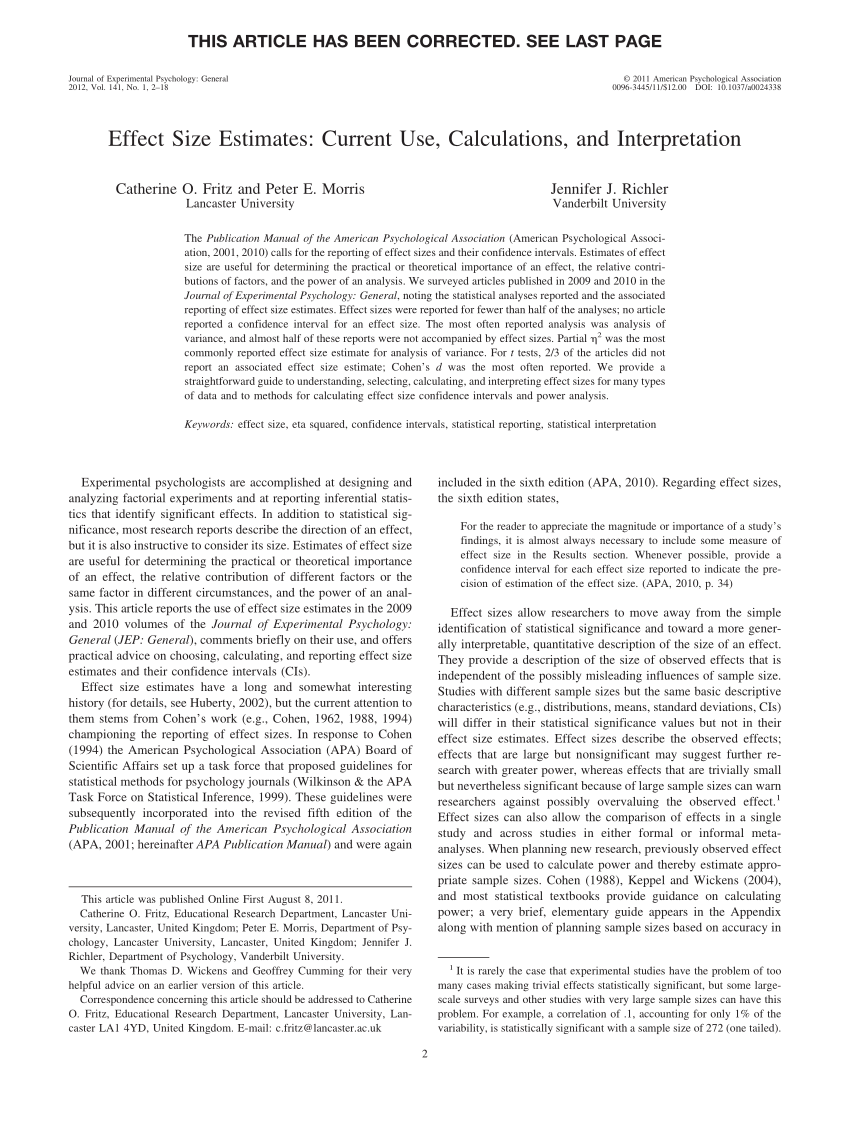

If we can assume that our data comes from a population with a normal distribution, it is helpful to picture the amount of overlap between two distributions associated with various values of Cohen's d. Below is a figure illustrating the amount of overlap associated with the three d values identified by Cohen (code used to generate figure is available here:

Figure 1: Examples of overlap between two normally distributed groups for different Cohen d values. The mean of the pink population is 50. The standardizer (i.e., the standard deviation) of the between-group difference is 15. Thus, for a standardised between-group difference of 0.5, the between-group difference (effect size; ES) in original units will be 0.5 = ES/15, which gives 7.5. So the difference between the mean of the two distributions is 1/2 a standard deviation, or 7.5 (figure panel 2).

As you can see, there is considerable overlap between the two distribution even when Cohen's d indicates a large effect. This means that even for large effects there will be many individuals that go against the population-level pattern. Always keep these types of figures in mind when trying to interpret effect size measures.

Thinking about Cohen's d: effect size in original units

This is often the first approach to use when interpreting results. The outcome measure used to compute Cohen's d may have known reference values (e.g., BMI) or a meaningful scale (e.g., hours of sleep per night).

Thinking about Cohen's d: the standardizer and the reference population

Results Cohen's D Articles

Cohen's d is a number of standard deviation units. It is important to ask yourself what standard deviation these units are based on. As was discussed in the previous post, if available it is always better to use an estimate of the population standard deviation rather than the standard deviation of the studied sample. If such a value is not available and the sample standard deviation is used, be aware that, as the denominator in the formula, the standardizer can have a large influence on the value of d.

Thinking about Cohen's d: values of d across disciplines

In any discipline there is a wide range of effect sizes reported. However, as highlighted by Cummings and Calin-Jageman, researchers in various fields have reported on what range of d values can be expected.

Cohen's D

The mean effect size in psychology is d = 0.4, with 30% of effects below 0.2 and 17% greater than 0.8. In education research, the average effect size is also d = 0.4, with 0.2, 0.4 and 0.6 considered small, medium and large effects. In contrast, medical research is often associated with small effect sizes, often in the 0.05 to 0.2 range. Despite being small, these effects often represent meaningful outcomes such as saving lives. For example, being fit decreases mortality risk in the next 8 years by d = 0.08. Finally, effects as large as d = 5 are common in fields such as pharmacology.

Summary

There is no straightforward way to interpret standardized effect size measures. While they are increasingly being reported in published manuscripts, Cohen's d and other such measures should not be glossed over. As pointed out in this and previous posts [1, 2], numerous things need to be considered when interpreting these values.